The Autonomous Future of Mobility

In the last two decades, autonomous driving has advanced from research to reality. Autonomous vehicles (AVs) offer tremendous promise, from reducing emissions and congestion when combined with electric power and ridesharing, to decreasing the number of traffic accidents and deaths. However, autonomous driving is still in its early stages: its safety remains relatively unproven, and the spatial and logistical implementation of AVs has not yet been adequately explored. In fact, despite AVs’ potential, their widespread implementation could exacerbate these very problems. Adversarial attacks on artificial intelligence pose significant threats to safety, and creating trustworthy AI remains a challenge. AV ridesharing is also likely to reduce the cost of individual travel, which may encourage more trips. Furthermore, with no need for engaged driving, riders may work while traveling and accept longer commutes, increasing vehicle load, energy consumption, and environmental consequences.

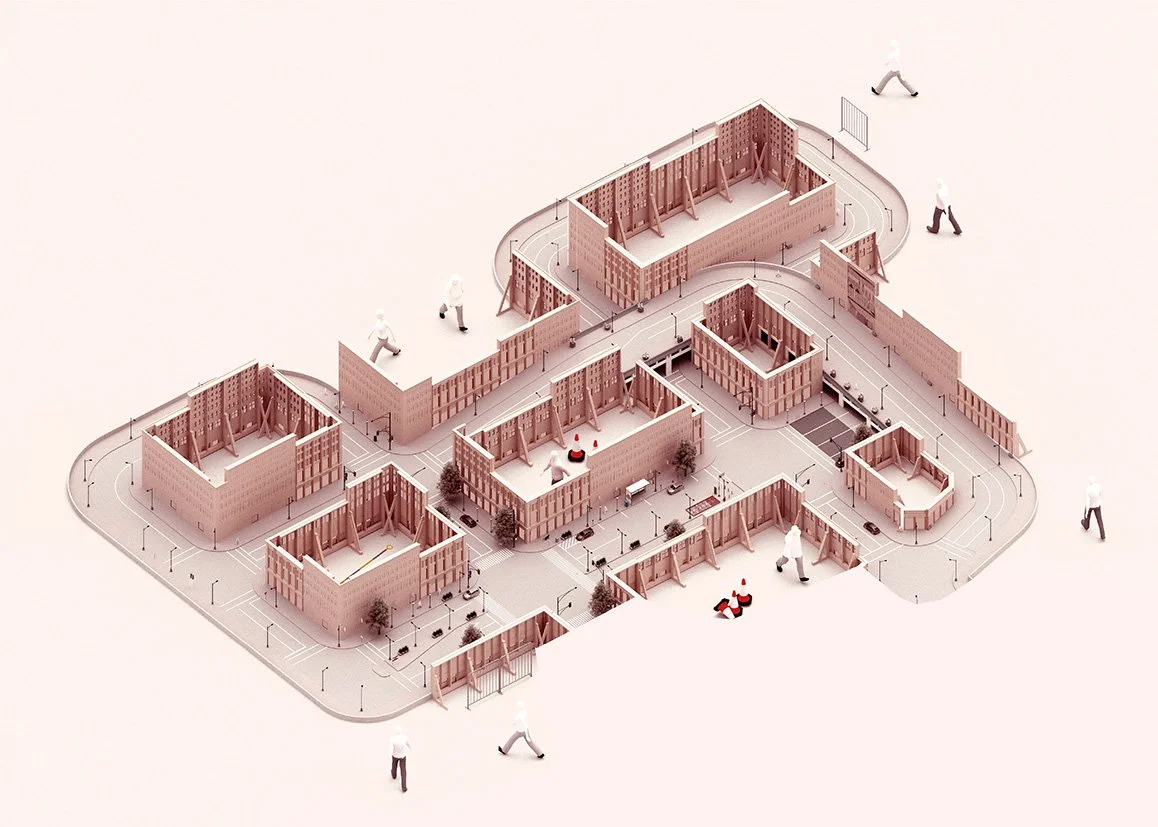

The core innovation of our interdisciplinary project, which combines knowledge from architecture and computer science, is the development of an autonomous driving platform that enables intermediate-scale physical experimentation. The research team has built a 1:8 scale physical environment for testing an F1/10 model AV with a full perceptual stack. This scale model of an urban driving environment measures 90’ by 30’ and is located in McKelvey Hall. Its modular design can be reconfigured to maximize the range of scenarios the miniature AV encounters. From an engineering perspective, the testing platform enables the team to evaluate the limits of autonomy at significantly lower cost, and with broader stress-testing, than full-scale facilities allow. From an architectural perspective, during Phase II of the project the model will allow the team to examine how AVs can be integrated into cities in ways that improve public health and safety, encourage equitable access to public transit, and support climate resilience.

The model city is an immersive environment in which building form, public space, material characteristics, and atmospheres are controlled and transformed over time, similar to a film set. Work spans software that captures real-world environments and applications that model digital ones. We have also been combining digital and hand fabrication techniques that shape three-dimensional form with those that apply two-dimensional color and imagery, using 3D printing, laser cutting, CNC routing, and analog methods. The project examines which digital fabrication techniques best achieve equivalence with real-world conditions. Material qualities such as color, texture, sheen, opacity, translucency, albedo, and luminosity are tuned to sensing technologies and layered onto architectural form and urban space. In addition, atmospheric interference such as fog, smog, and glare can be modeled in the environment.

Location: Washington University in St. Louis

Schedule: 2019-2022

Principal Investigators: Constance Vale and Yevgeniy Vorobeychik, Ph.D.

Research Assistants: Carlos Cepeda, Flora Chen, Zhuoxian Deng, Ryan Doyle, Lily Ederer, Xiaofan Hu, Marshall Karchunas, Yun Lee, Min Lin, Kevin Mojica, Chuchu Qi, Mason Radford, Olga Sobkiv, Alexis Williams, Yi Wang, Yuzhu Wang, Sharon Wu

Materials: wood, plywood, 3D print